How to Get Google to Index Your Website

Google's indexing process is complicated, with many phases affecting each other.

To get Google to index your website quickly, make sure there are no roadblocks preventing it from indexing your site in the first place.

Secondly, do whatever you can to notify Google that you have fresh content and want your website to be indexed. Bear in mind that the quality of your content and a lack of internal links may be deal-breakers in the indexing process.

Finally, boost your website's popularity by building external links to your website and getting people to talk about your content on social media.

If your content isn’t on Google, does it even exist?

For your website to be visible on the dominant search engine, it first needs to be indexed. In this article, we’ll show you how to get Google to index your site quickly and efficiently and what roadblocks to avoid hitting.

Google’s indexing process in a nutshell

Before diving into how to get your website indexed, let’s go over a simplified explanation of how Google’s indexing process works.

Google’s index can be compared to a massive library – one that’s larger than all the libraries in the world combined!

The index contains billions and billions of pages, from which Google picks the most relevant ones when users make search queries.

With this much content that keeps on changing, Google must constantly search for new content, content that’s been removed, and content that’s been updated – all to keep its index up-to-date.

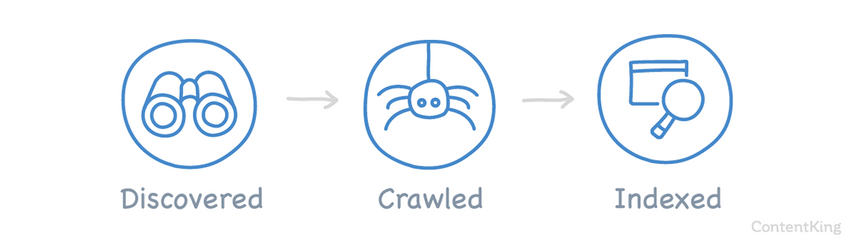

In order for Google to rank your site, it first needs to go through these three phases:

- Discovery: By processing XML sitemaps and following links on other pages Google already knows about, the search engine discovers new and updated pages and queues them for crawling.

- Crawling: Google then goes on to crawl each discovered page and passes on all the information it finds to the indexing processes.

- Indexing: Among other things, the indexing processes handle content analysis, render pages and determine whether or not to index them.

The interconnectedness of Google’s indexing system

Google’s indexing process is highly complex, with lots of interdependencies among the steps included in the process. If some part of the flow goes wrong, that affects other phases as well.

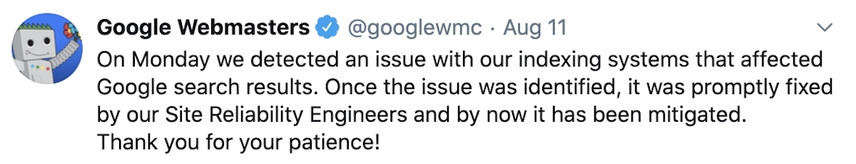

For instance, on August 10, 2020, the SEO community noticed a flurry of changes in search result rankings. Many argued that this meant Google was rolling out a significant update. But the next day, Google announced that it was in fact caused by a bug in their indexing system that affected rankings:

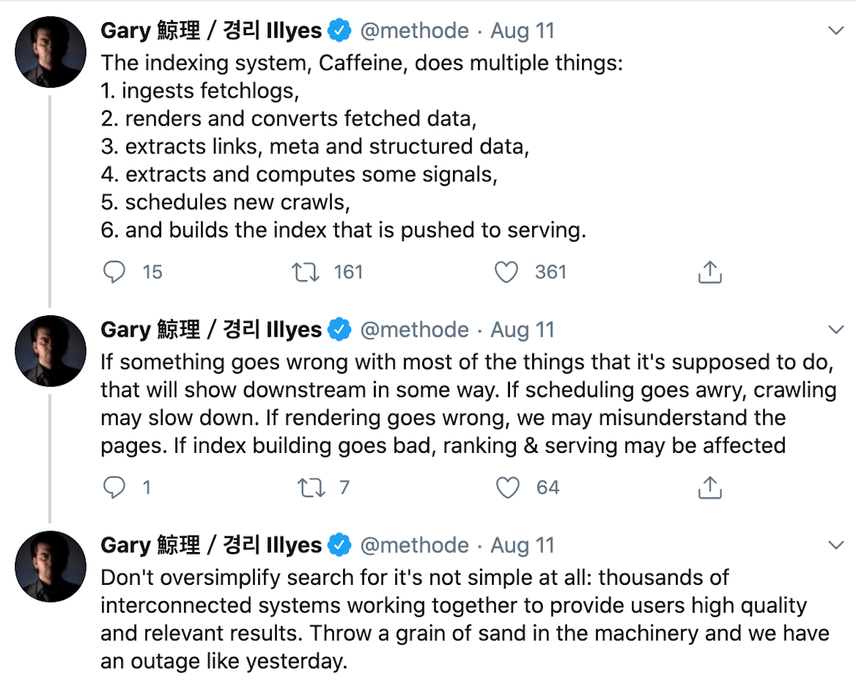

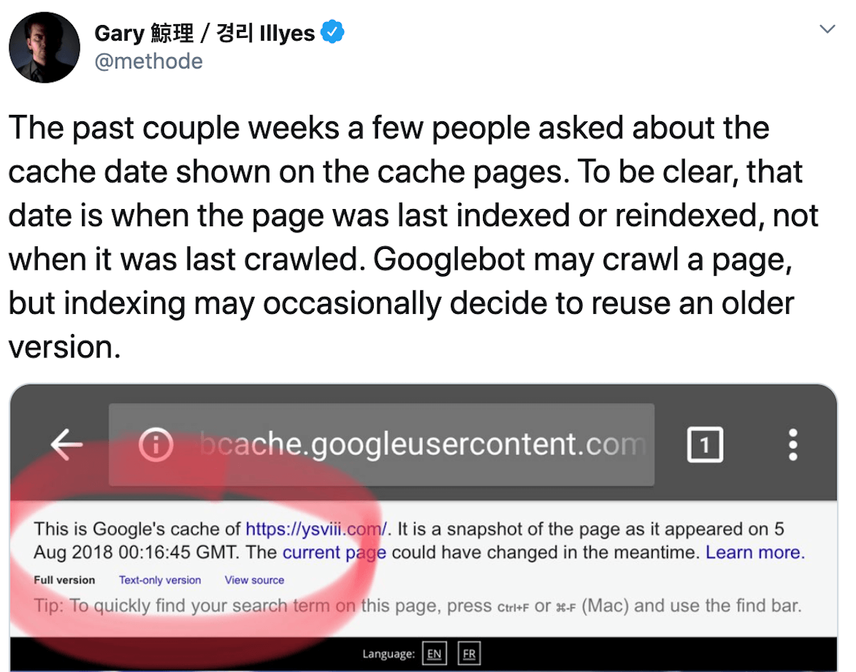

To shed some light on how complicated and intertwined the indexing process is, Gary Illyes explained the Caffeine workflow in a Twitter thread:

This tweet suggests that a bug in the indexing phase can have a big effect on the process that follows it – in this case messing up the ranking system.

Alongside this event, it’s important to note that in May 2020, Google underwent a broad core update that impacted the indexing process. Since then, Google has been slower to index new content and is more picky about the content it decides to index. It seems as if its quality-filtering process has become a lot stricter than it previously was.

How to check if Google has indexed your website?

There are several quick ways to check whether Google has indexed your website, or whether your pages are still stuck in the preceding phases, discovery and crawling.

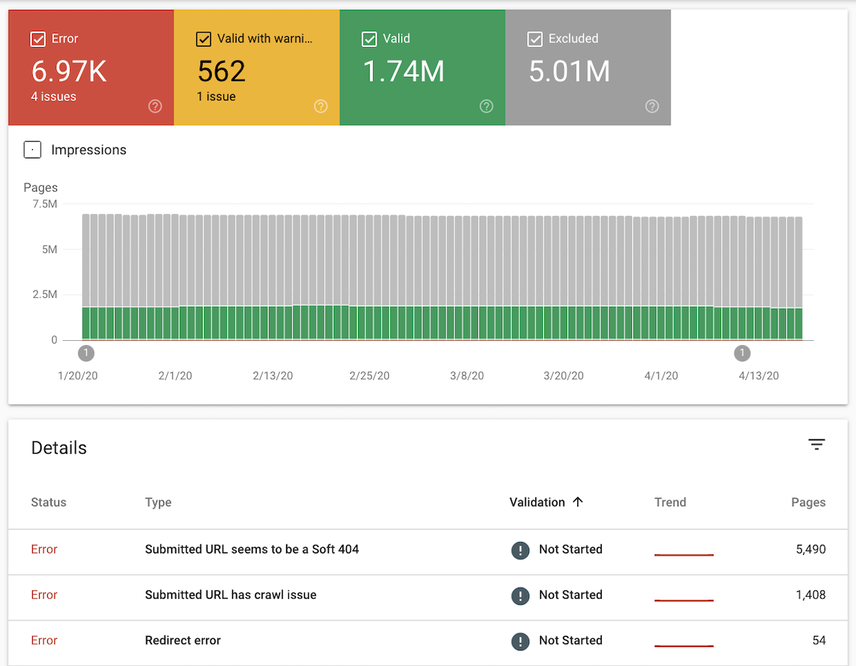

Feedback from Google Search Console

Use Google Search Console’s Index Coverage Report to get a quick overview of your website’s indexing status. This report provides feedback on the more technical details of your site’s crawling and indexing process.

The report returns four kinds of statuses:

- Valid: these pages were indexed successfully.

- Valid with warnings: these pages were indexed, but there are some issues you may want to check out.

- Excluded: these pages weren’t indexed, as Google picked up clear signals that it shouldn’t index them.

- Error: Google could not index these pages for some reason.

Checking your website’s Index Coverage report

- Log on to Google Search Console.

- Choose a property.

- Click Coverage under Index in the left navigation.

Here’s an example of what an Index Coverage report for a large website looks like:

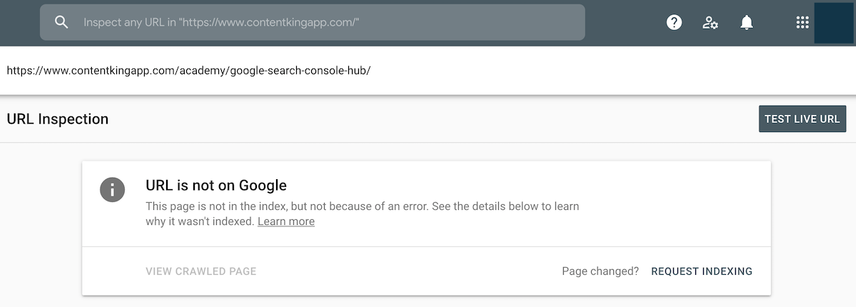

The Index Coverage report lets you quickly check your site’s overall indexing status, and meanwhile, you can use Google Search Console’s URL Inspection tool to zoom in on individual pages.

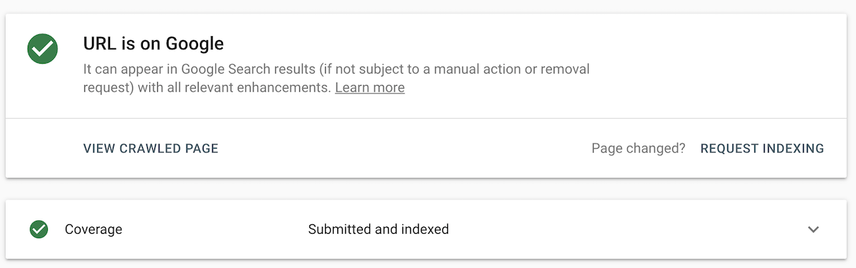

Check your URL in Google Search Console

- Log on to Google Search Console.

- Choose a property.

- Submit a URL from the website you want to check.

Next, you’ll see something like this:

If the URL Inspection tool shows you the URL isn’t indexed yet, you can use the very same tool to request indexing.

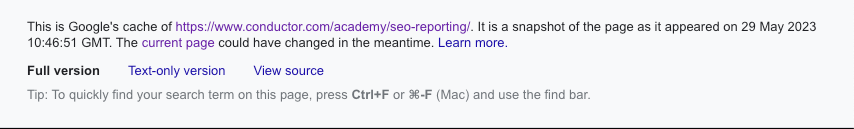

Check the URL’s cache

Check whether your URL has a cached version in Google, either by typing cache:https://example.com into Google or the address bar, or by clicking the little arrow pointing downwards under the URL on a SERP.

If you see a result, Google has indexed your URL. Here’s an example for one of our articles:

The date included in the screenshot refers to the last time the page was indexed. Keep in mind that it doesn’t say anything about when it was last crawled. The page may have been crawled again later without Google indexing its updates, as Gary Illyes pointed out in this tweet.

At the same time, checking a URL's cache isn't foolproof either: you may see a cached page even though the page has since been removed from Google's index.

If it ranks, it’s indexed

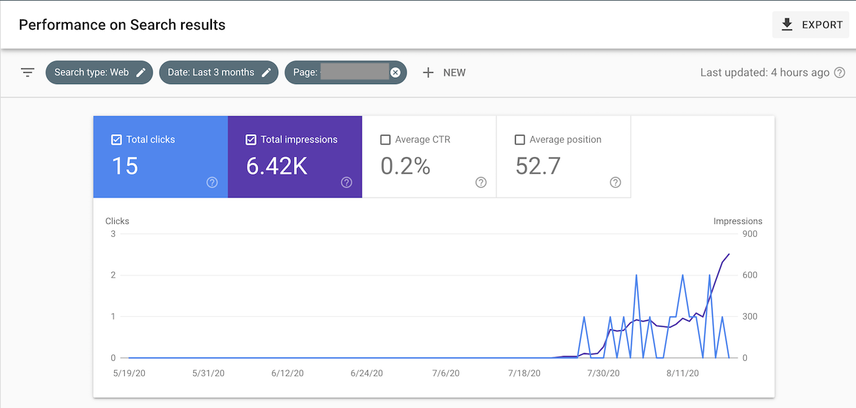

Another way to verify if your pages have been indexed is to check whether they are ranking using a rank tracker, or simply by checking Google Search Console’s Performance data to see if you’re getting clicks and impressions:

Use Performance data to determine index status

- Log on to Google Search Console.

- Choose a property.

- Click Search results under Performance on the left-hand side.

- There, filter on the page you’re looking for by clicking the filter at the top. By default it opens with the setting URLs containing. Fill in the URL(s) you’re looking for.

Next, you’ll see something like this:

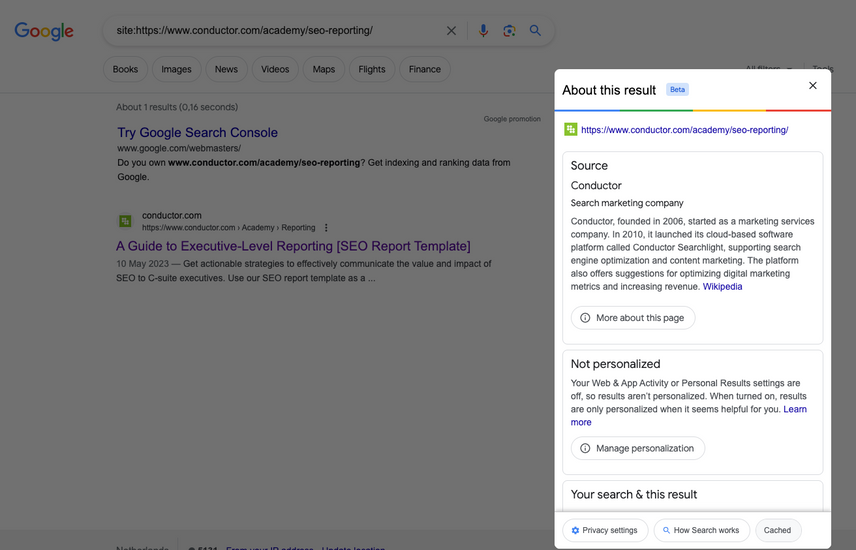

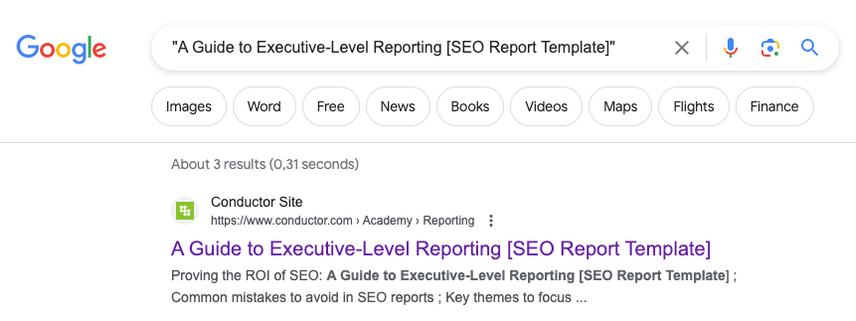

Searching for the exact page title or URL

Alternatively, to see if a page is indexed, you can search for the exact page title by putting it in between quotes ("Your page's title"), use the intitle: search operator with your page's title (intitle:"Your page's title"), or just enter the URL into Google.

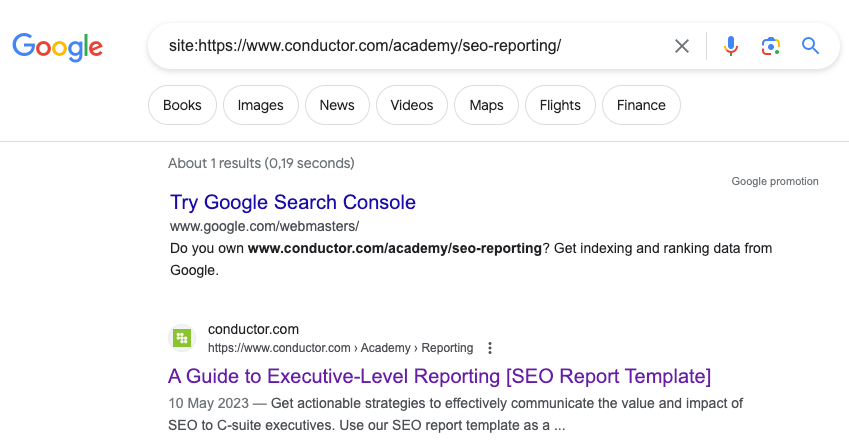

site: queries

You can also check whether your page is indexed by using a site: query for the page. Here’s an example: entering site:https://www.conductor.com/academy/seo-reporting/ into Google can show whether that page is indexed.

However, this approach is not always reliable!

We’ve seen instances where pages are ranking, but they aren’t showing up for site: queries. So never rely on this check alone.

How to get Google to index your website quickly

Here's how to get Google to quickly index your website:

1. Provide Google with high-quality content only

This should be the number one goal of your content. Not only will it help you get indexed faster, but Google’s aim is to return high-quality content to its users as quickly as possible. Therefore, always focus on providing Google with the best content you can possibly produce to increase your chances of being indexed quickly.

With Google’s strict content evaluation and never-ending competition, creating and optimizing great content is a process that will never cease.

Apart from generating new content, focus on improving what’s already in place. Update underperforming content so that it returns better answers to potential visitors. If you have low-quality or outdated content on your website, consider either removing it completely or discouraging Google from spending its precious crawl budget on it.

The process of cutting off outdated, irrelevant, or simply too low-quality content is called content pruning. By getting rid of the dead weight, you’re creating room for other content – that does have potential – to flourish, providing Google with only the best content your website has to offer.

2. Prevent duplicate content

Another way to waste Google’s crawl budget is to have duplicate content. This term refers to very similar, or identical, content that appears on multiple pages within your own website, or on other websites.

Overall, duplicate content can be truly confusing for Google. In principle, Google indexes only one URL for each unique set of content. But it’s hard for the search engine to determine which version to index, and this is subsequently reflected in its search results. And as the identical versions keep on competing against each other, performance drops for all of them.

Duplicate content can turn into a serious problem, mainly for eCommerce website owners, who have to find a way to signal to Google which parts of their website to index and which to keep hidden.

To this end, you can use robots.txt disallow rules for filters and parameters, or you can implement canonical URLs. But as mentioned in the first part of this article, be very careful what you implement, as even a tiny change can have a negative impact.

3. Prevent robots directives from impacting indexing

A common reason why Google doesn't index your content is the robots noindex directive. While this directive helps you prevent duplicate content issues, it sends Google a strong signal not to index certain pages on your website. Robots directives can be implemented in the HTML source (the meta robots tag) and in the HTTP header (X-Robots-Tag).

In your HTML source, the meta robots tag may look something like this:

<meta name="robots" content="noindex,follow" />

Only implement this directive on pages you definitely don’t want indexed. If a page you do want indexed is having indexing issues, double-check that the noindex directive isn’t implemented on it.
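To double-check pages for a noindex directive, a small script can help. The following is a minimal sketch in Python, not a definitive audit tool: the names `RobotsMetaParser` and `has_noindex` are assumptions made for illustration, and the function takes the HTML and response headers as inputs rather than fetching them. It also ignores user-agent-specific header values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html, headers=None):
    """Return True if the HTML or an X-Robots-Tag header asks not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives.update(d.strip().lower() for d in value.split(","))
    # "none" is shorthand for "noindex, nofollow"
    return "noindex" in directives or "none" in directives


page = '<html><head><meta name="robots" content="noindex,follow" /></head></html>'
print(has_noindex(page))
```

Running a check like this over the URLs you expect to rank is a quick way to catch a noindex directive that slipped through from a staging environment.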

4. Set up canonical tags correctly

Although canonical tags aren’t as strong a signal as meta robots directives, their incorrect use can lead to indexing issues. Make sure the pages you want to get indexed aren’t canonicalized to another URL.

One thing I've seen is sites that get so caught up in ensuring their pages canonicalize that they end up canonicalizing to pages that are also marked with noindex. Google needs clear, consistent signals, so canonicalizing your content to a page marked noindex could stop the affected pages' performance in their tracks.
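A canonical check can be scripted in a similar way. The sketch below is illustrative only: `CanonicalParser`, `canonical_target` and `is_self_canonical` are hypothetical helper names, and the comparison is a simple string match that ignores a trailing slash, which is stricter than how Google treats canonical signals.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attr.get("href")


def canonical_target(page_url, html):
    """Return the absolute canonical URL declared in the HTML, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    if parser.canonical is None:
        return None
    return urljoin(page_url, parser.canonical)


def is_self_canonical(page_url, html):
    """True if the page has no canonical tag or points to itself."""
    target = canonical_target(page_url, html)
    return target is None or target.rstrip("/") == page_url.rstrip("/")


html = '<head><link rel="canonical" href="/shoes/" /></head>'
print(canonical_target("https://example.com/shoes/?color=red", html))
print(is_self_canonical("https://example.com/shoes/?color=red", html))
```

For any page that is not self-canonical, it is worth fetching the canonical target too and confirming that it is indexable.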

5. Don’t disallow content you want to get indexed

The robots.txt file is an important tool that sends signals to all search engines about the crawlability of your URLs. It can be set to let Google know it should ignore certain parts of your website.

Make sure that the URLs you want to be indexed aren’t disallowed in robots.txt. Messing up your robots.txt can lead to new content and content updates not being indexed. Be aware that anyone can make mistakes in the robots.txt file – even big companies such as Ryanair.

To check what pages are blocked by robots.txt, check the “Indexed, though blocked by robots.txt” report in Google Search Console.

Disallowing a URL in robots.txt doesn’t necessarily mean that the page will disappear from Google Search. It might still appear in SERPs, but with a poor snippet.

The robots.txt file may be simple to use, but it is also quite powerful in terms of causing a big mess. I've seen many cases where websites were "ready to go" and were pushed live with a Disallow: /, resulting in all pages being blocked for search engines and nobody being able to find the website through Google Search. Meanwhile, the client starts to wonder why Google isn't indexing anything. One line of code can pass unnoticed and block Google from finding all your website's content!
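A stray Disallow: / is easy to catch before launch with a short test. Here is a minimal sketch using Python's standard-library `urllib.robotparser`; the function name `blocked_urls` and the example URLs are assumptions for illustration. Note that this parser follows the basic robots.txt rules and may not match Google's handling of wildcards, so treat it as a first-pass check rather than a replacement for Google Search Console.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt content disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# The kind of file that sometimes gets pushed live from a staging site
robots_txt = """User-agent: *
Disallow: /
"""

important_urls = [
    "https://example.com/",
    "https://example.com/blog/",
]
print(blocked_urls(robots_txt, important_urls))
```

If the returned list contains any URL you want indexed, the robots.txt file needs fixing before the site goes live.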

6. Prevent crawler traps and optimize crawl budget

To make sure you get the most out of Google crawling your website, avoid creating crawler traps. Crawler traps are structural issues within a website that result in crawlers finding a virtually infinite number of irrelevant URLs, in which the crawlers can get lost.

You should make sure that the technical foundation of your website is up to par, and that you are using proper tools that can quickly detect crawler traps Google may be wasting your valuable crawl budget on.

The biggest cause for crawler traps on websites today comes from faceted navigation and filters for pricing or size (especially if you can select multiple). If you don't hide these URLs from Google, you can easily create millions of extra URLs from only a few pages. Remember that Google can follow both regular links and JavaScript links and that URLs are case sensitive.

My advice: make sure that all URL variations that need to be blocked off, truly are blocked off!
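To see how quickly filters multiply URLs, it helps to do the arithmetic. The sketch below is a simplified model with made-up filter sizes (8 sizes, 10 colors, 5 price ranges are assumptions, not data from a real site): with multi-select, every option is independently on or off, so a filter with n options contributes 2^n combinations.

```python
from math import prod


def count_filter_urls(option_counts, multi_select=True):
    """Count distinct filter combinations for one listing page.

    The count includes the unfiltered page itself.
    """
    if multi_select:
        # Each option can be selected or not, independently of the others
        return prod(2 ** n for n in option_counts)
    # Single-select: one option per filter, or no option at all
    return prod(n + 1 for n in option_counts)


facets = {"size": 8, "color": 10, "price": 5}  # hypothetical filter sizes
print(count_filter_urls(facets.values()))                      # 8388608
print(count_filter_urls(facets.values(), multi_select=False))  # 594
```

In this model a single category page with three multi-select filters already yields more than eight million URL variations, and that is before counting different parameter orders or letter casing.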

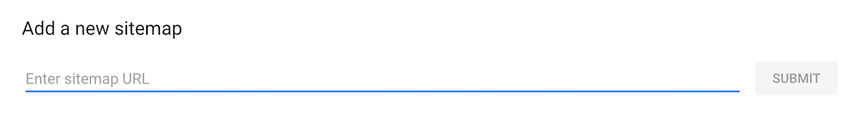

7. Feed Google indexable content through an XML sitemap

Once you are sure there is no blockage on your side, you should make it easy for Google to discover your URLs and to understand your website’s infrastructure in general. XML sitemaps are a great way to do this.

All newly published content or updated content that needs to be indexed should be added to your XML sitemap(s) automatically. To make your content easy for Google to find, submit your XML sitemap(s) to Google Search Console.

- Sign in to Google Search Console.

- Select the property for which you want to submit a sitemap.

- Click Sitemaps under Index on the left-hand side.

- Submit the XML Sitemap URL.

Google will then regularly check your submitted XML sitemap for new content to discover, crawl and, hopefully, index.

If you have a large website, keep in mind that each XML sitemap is limited to a maximum of 50,000 URLs. It’s better to generate more XML sitemaps with fewer URLs each than to exceed that limit.

Oliver Mason has described an XML sitemap strategy in which he limited the number of URLs per sitemap to 10,000 and organized them chronologically – with sitemap1.xml containing the oldest pages, and sitemap14.xml containing the most recent pages.

This led to improved indexing. It seems it pays off to feed Google smaller batches of URLs and to group new content together.
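The chunking strategy described above can be sketched in a few lines of Python. This is an illustration under assumptions, not the implementation Oliver Mason used: the function name `build_sitemaps` is hypothetical, the URL list is assumed to be sorted oldest-first already, and the files are returned as strings instead of being written to disk.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemaps(urls, base_url, chunk_size=10_000):
    """Split URLs into numbered sitemaps plus one sitemap index.

    Returns ({filename: xml_string}, index_xml_string).
    """
    files = {}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for start in range(0, len(urls), chunk_size):
        name = f"sitemap{start // chunk_size + 1}.xml"
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + chunk_size]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        files[name] = ET.tostring(urlset, encoding="unicode")
        # Reference each chunk from the sitemap index
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = base_url + name
    return files, ET.tostring(index, encoding="unicode")


# Hypothetical site with 25 pages, chunked by 10 to keep the demo small
pages = [f"https://example.com/page-{i}/" for i in range(1, 26)]
files, index_xml = build_sitemaps(pages, "https://example.com/", chunk_size=10)
print(list(files))
```

Because the input is sorted oldest-first, the highest-numbered sitemap always holds the newest content, so only that file and the index change when new pages are published.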

My go-to way of getting anything indexed quickly is always to verify the site in Search Console and then submit the XML sitemap there.

Always make sure your XML sitemap contains all the pages you want to have indexed, and that it is organized so Google can read them, with sitemap indexes if required.

For me this has been the best way to knock on Google's door to let them know they can tour through the website ASAP and crawl and index everything found there. You can always check back to see when the sitemap was submitted and last read by Google.

8. Manually submit your URLs to Google Search Console

While Google will discover, crawl, and potentially index your new or updated pages on its own, it still pays to give it a push by submitting URLs into Google Search Console. This way, you can also speed up the ranking process.

You can submit your URLs in GSC's URL Inspection tool:

- Sign in to Google Search Console

- Select a website for which you want to submit a URL

- Enter the URL in the inspection field at the top of Google Search Console.

- Check if the URL is indexable by clicking the TEST LIVE URL button.

- Click the REQUEST INDEXING button.

- Do the same for pages that link to the page you want Google to index.

Please note that pages may be indexed and yet not ranking. For example, if you request indexing in Google Search Console (GSC), your pages may be indexed quickly, but they won't rank right away. However, this will generally speed up the whole process.

9. Submit a post through Google My Business

Submitting a post through Google My Business gives Google an extra push to crawl and index URLs that you've included there. We don't recommend doing this for just any post, and keep in mind that the post will be shown in the Google My Business knowledge panel on the right-hand side for branded searches.

- Sign in to Google My Business

- Choose the location you want to submit a post for.

- Click Create post and choose the What's New type.

- Add a photo, write a brief post, select the Learn more option for Add a button (optional), fill in your URL in the Link for your button field and hit Publish.

- Do the same for pages that link to the page you want Google to index.

10. Automatic indexing via the Google Indexing API

Websites that have many short-lived pages, such as job postings, event announcements, or livestream videos, can use Google’s Indexing API to automatically request that Google crawl and index new content and content changes. Because it allows you to push individual URLs, it's an efficient way to help Google keep its index fresh.

With the Indexing API, you can

- Update a URL: notify Google of a new or updated URL to crawl

- Remove a URL: notify Google that you have removed an outdated page from your website

- Get the status of a request: see when Google crawled the URL the last time

Although Google doesn't recommend feeding it content types other than jobs and events, I have managed to index regular pages using the API. One thing I've noticed is that the API seems to work better for new pages than for re-indexing. Google might enforce this restriction at some point, but for now it's working fine. RankMath has a plugin which can make the job a lot easier, but it requires a bit of setup.
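For those who prefer to call the API directly, here is a hedged sketch of what an update or removal notification looks like. It only builds the HTTP request and does not send it. The endpoint and the `URL_UPDATED` and `URL_DELETED` types come from Google's public Indexing API documentation; the function names and the `ACCESS_TOKEN` placeholder are assumptions, and obtaining a real OAuth token for a service account that is verified as an owner in Search Console is not shown here.

```python
import json
from urllib.request import Request

ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_notification(url, removed=False):
    """Body of a publish call: URL_UPDATED for new or changed pages, URL_DELETED for removed ones."""
    return {"url": url, "type": "URL_DELETED" if removed else "URL_UPDATED"}


def build_request(url, access_token, removed=False):
    """Prepare (but do not send) the authenticated POST request."""
    body = json.dumps(build_notification(url, removed)).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {access_token}",
    }
    return Request(ENDPOINT, data=body, headers=headers, method="POST")


# ACCESS_TOKEN is a placeholder; a real token must be obtained via OAuth
request = build_request("https://example.com/jobs/seo-specialist/", "ACCESS_TOKEN")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Passing the prepared request to `urllib.request.urlopen` would submit it, which only makes sense once a valid token is in place.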

11. Leverage internal links and avoid using nofollow

Internal links play a huge role in making Google understand the topics of your website and its inner hierarchy. By implementing strategically placed internal links, you will make it easier for Google to understand what your content is about and how it helps users.

Make sure that you avoid using the rel="nofollow" attribute on your internal links, as the nofollow attribute value signals to Google that it shouldn’t follow the link to the target URL. This results in no link value being passed as well.
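Nofollowed internal links are easy to overlook in templates, so it can be worth scanning for them. The following minimal sketch, in which `NofollowLinkParser` and `find_nofollow_internal_links` are hypothetical names, lists links that point to the same host as the page and carry a nofollow value. It works on HTML you pass in and treats only an exact host match as internal, so subdomains count as external.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class NofollowLinkParser(HTMLParser):
    """Collects same-host links whose rel attribute contains nofollow."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        if not href:
            return
        target = urljoin(self.page_url, href)  # resolve relative links
        rel_values = (attr.get("rel") or "").lower().split()
        if urlparse(target).netloc == self.host and "nofollow" in rel_values:
            self.nofollowed.append(target)


def find_nofollow_internal_links(page_url, html):
    parser = NofollowLinkParser(page_url)
    parser.feed(html)
    return parser.nofollowed


html = (
    '<a href="/blog/" rel="nofollow">Blog</a>'
    '<a href="https://other.example/" rel="nofollow">Partner</a>'
    '<a href="/about/">About</a>'
)
print(find_nofollow_internal_links("https://example.com/", html))
```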

If you need new pages indexed fast, be strategic about how you internally link to them.

Adding internal links on your home page and site-wide areas like the header and footer will significantly speed up the process of crawling and indexing.

Consider creating dynamic areas on your home page that show your latest content, whether that's a blog post, news article, or product.

You can also use links within a mega menu that list the latest URLs within your site's different taxonomies.

12. Build relevant backlinks to your content

It’s not an overstatement to say that link building is one of the most important disciplines in this field. A commonly cited estimate is that links account for more than 50 percent of your SEO success.

Via inbound links, often called backlinks, Google can discover your website. And as links also transfer a portion of their authority, you will get indexed faster if a backlink is coming from a high-authority website, and it will significantly affect your rankings.

To help you boost your indexing and ranking options, here is a whole list of highly effective link building strategies.

13. Create buzz around your content on social media

Earlier in this article, we mentioned that Google has become much stricter when it comes to what content they index. When you create buzz around your content on social media, it signals to Google that the content is popular, which speeds up the indexing process. For instance, posting your content on Twitter together with a few popular hashtags can really help in speeding up the indexing process.

On top of that, creating buzz around your content will also lead to newsletter inclusions and backlinks!

Because of Google's access to Twitter's "firehose data stream", you'll find that all content types, but especially news content, will be discovered quickly if they get shared on Twitter.

Conclusion

Getting your website indexed properly by Google can turn out to be a hell of a job. You have to tackle many technical as well as content-oriented and PR-based challenges. And since the Google core update in May 2020, getting new pages indexed has only become harder.

But with a proper strategy and checklist in place, you can get Google to index the most important parts of your website and boost your SEO performance with high rankings.